AI Agents vs Agentic AI

AI Agents vs Agentic AI: Key Differences in 2026

Vendor pitches for AI customer support solutions blur together after a while, and somewhere in each demo the terms "AI agents" and "agentic AI" get used interchangeably despite meaning fundamentally different things.

The distinction determines whether an investment automates responses or genuinely resolves customer problems, which has direct implications for headcount, satisfaction scores, and whether that shiny new platform becomes a competitive advantage or expensive shelfware.

What Are AI Agents?

AI agents are software systems that perceive their environment, make decisions, and take actions to accomplish specific tasks through predefined rules or learned behaviors, functioning as the "doers" in an automation stack.

A chatbot routing tickets based on keywords, a system retrieving order status, or an automated responder sending templated answers all qualify as AI agents. These tools prove useful within their scope, but that scope remains narrow because agents respond to triggers rather than pursuing goals. When a customer's issue requires coordinating across multiple systems or making judgment calls outside the training data, agents hit their ceiling and tickets land back in human queues.

Types of AI Agents

- Reactive agents respond to inputs with predefined rules and maintain no memory, returning the same FAQ whether a customer asks once or five times in a row.

- Model-based agents track conversation context within sessions, representing meaningful improvement for multi-turn conversations though state remains session-scoped.

- Learning agents improve through feedback loops, optimizing specific metrics over time without developing transferable problem-solving capability.

- Utility-based agents evaluate options against defined objectives, bringing more sophistication to individual decisions while still operating within their utility function's bounds.
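The gap between the first two types is easy to see in a few lines of Python. The following is a minimal sketch, not a production design: the `FAQ` table, the `"ESCALATE"` sentinel, and both agent names are assumptions made up for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical FAQ table; keywords and answers are illustrative only.
FAQ = {"hours": "We are open 9am-5pm, Monday to Friday."}


def reactive_agent(message: str) -> str:
    """Stateless: the same input always yields the same output."""
    for keyword, answer in FAQ.items():
        if keyword in message.lower():
            return answer
    return "ESCALATE"


@dataclass
class ModelBasedAgent:
    """Tracks how often each keyword came up within one session."""
    seen: dict = field(default_factory=dict)

    def respond(self, message: str) -> str:
        for keyword, answer in FAQ.items():
            if keyword in message.lower():
                self.seen[keyword] = self.seen.get(keyword, 0) + 1
                if self.seen[keyword] > 1:
                    # Session state lets the agent notice the repeat.
                    return "ESCALATE"
                return answer
        return "ESCALATE"
```

The reactive function repeats the FAQ answer indefinitely, while the model-based agent notices the second ask. Its state still disappears when the session object does, which is the session-scoped limit described above.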

What Is Agentic AI?

Agentic AI refers to systems capable of reasoning and acting independently to achieve long-term goals, with the ability to plan, coordinate multiple processes, and adapt based on outcomes.

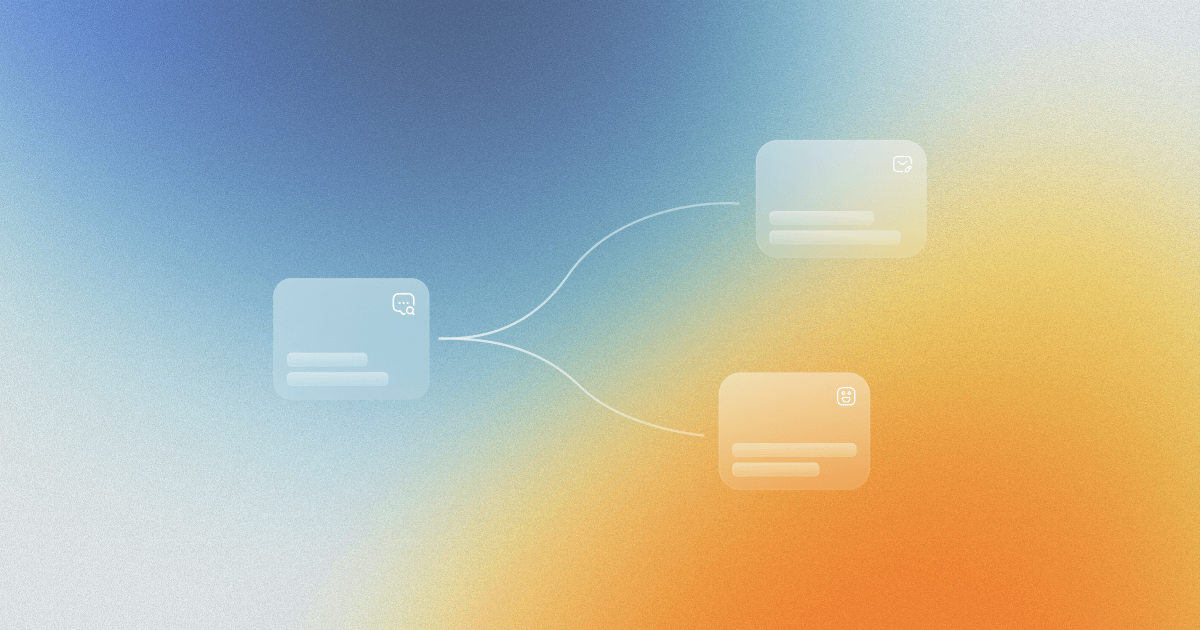

Where AI agents execute tasks, agentic AI ensures the right workers tackle the right problems in the right sequence to achieve business outcomes. When a customer needs a refund, an agentic system doesn't send a policy link and close the ticket. It verifies the purchase, checks the return window, confirms policy allows the refund, initiates the payment transaction, updates inventory, and confirms completion.

Three components separate agentic AI from agent collections.

Persistent memory maintains context across the customer relationship rather than just within conversations.

Planning modules decompose goals into action sequences rather than following fixed scripts.

Orchestration layers coordinate multiple agents through frameworks like LangGraph or CrewAI, managing handoffs and state sharing that complex workflows require.
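How the three components fit together can be sketched in plain Python, without relying on any particular framework. Everything here is an assumption for illustration: the in-process `memory` dict stands in for a real persistent store, the hard-coded plan stands in for a planning module, and the step names simply mirror the refund example above.

```python
from typing import Callable

# Stand-in for persistent memory: keyed by customer, not by conversation.
memory: dict[str, list[str]] = {}


def plan(goal: str) -> list[str]:
    """Planning: decompose a goal into ordered steps (hard-coded here)."""
    plans = {
        "refund": ["verify_purchase", "check_return_window", "confirm_policy",
                   "initiate_payment", "update_inventory", "confirm_completion"],
    }
    return plans.get(goal, [])


def orchestrate(customer_id: str, goal: str,
                agents: dict[str, Callable[[str], bool]]) -> str:
    """Orchestration: run each step's agent in order, stop on first failure."""
    history = memory.setdefault(customer_id, [])
    steps = plan(goal)
    if not steps:
        history.append(f"{goal}: escalated (no plan)")
        return "escalated"
    for step in steps:
        agent = agents.get(step)
        if agent is None or not agent(customer_id):
            history.append(f"{goal}: escalated at {step}")
            return "escalated"
    history.append(f"{goal}: resolved")
    return "resolved"
```

Each entry in `agents` is an ordinary single-task agent. What makes the whole thing agentic in the sense used here is the layer around them: a goal, a plan, and a record that outlives the conversation.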

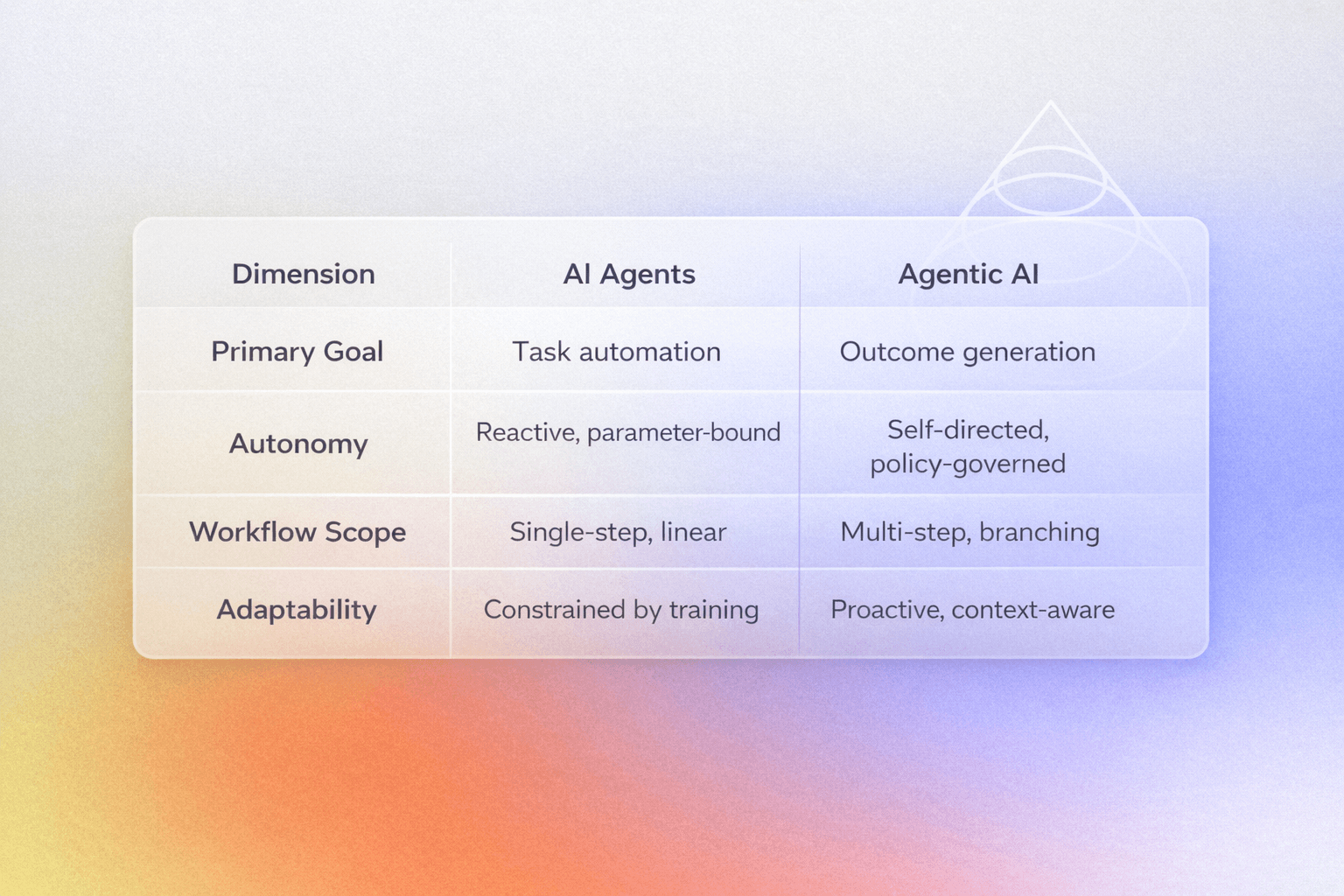

AI Agents vs Agentic AI: Key Differences

Primary Goal

Agents automate tasks like classifying tickets or sending notifications, with success measured by whether the task executed correctly. Agentic AI generates outcomes by resolving problems end-to-end, measured by whether customer needs were addressed regardless of tasks required. A refund request triggering an acknowledgment with a policy link was processed; one resulting in money back was resolved.

Autonomy

Agents operate within defined parameters and escalate at boundaries, waiting reactively for inputs. Agentic systems assess situations, determine actions, and self-correct when approaches fail, operating with "governed autonomy" where behavior is controlled via policies and permissions while decisions happen within those bounds. Agents generate escalations when situations don't fit training; agentic systems resolve within policy boundaries and escalate only when governance requires human judgment.
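Governed autonomy can be pictured as a policy object that bounds what the system may decide on its own. The sketch below is an illustration under assumed values: `RefundPolicy`, its limits, and the three outcome labels are hypothetical, not taken from any real platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RefundPolicy:
    # Hypothetical limits; real values would come from the business.
    auto_approve_limit: float = 100.0
    return_window_days: int = 30


def decide_refund(amount: float, days_since_purchase: int,
                  policy: RefundPolicy = RefundPolicy()) -> str:
    """Return 'approve', 'deny', or 'escalate' under the given policy."""
    if days_since_purchase > policy.return_window_days:
        return "deny"
    if amount <= policy.auto_approve_limit:
        return "approve"
    # Inside the window but above the limit: governance requires a human.
    return "escalate"
```

The system decides freely inside the policy and escalates only where the policy says a human must decide, rather than whenever a situation looks unfamiliar.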

Workflow Scope

Agents excel at linear tasks like retrieving data or updating fields. Agentic AI manages branching workflows where next steps depend on previous outcomes. A subscription cancellation might involve checking account status, verifying identity, reviewing contract terms, calculating refunds, processing in billing systems, updating permissions, and confirming, with different paths depending on what each step reveals.
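A branching workflow of this kind might look like the following sketch. The account fields (`status`, `identity_verified`, `months_remaining`) and the rule that remaining prepaid months trigger a refund are assumptions chosen for illustration.

```python
def cancel_subscription(account: dict) -> list[str]:
    """Return the path taken; each branch depends on earlier findings."""
    path = ["check_account_status"]
    if account["status"] != "active":
        return path + ["confirm_already_inactive"]

    path.append("verify_identity")
    if not account["identity_verified"]:
        return path + ["escalate_to_human"]

    path.append("review_contract_terms")
    if account["months_remaining"] > 0:
        # Assumed rule: prepaid time remains, so a prorated refund is owed.
        path += ["calculate_refund", "process_in_billing"]

    return path + ["update_permissions", "confirm_cancellation"]
```

The same request produces different step sequences depending on what each check reveals, which is what separates it from a linear lookup or field update.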

Adaptability

Agents follow training, performing well when situations match patterns and degrading when they diverge. Agentic AI anticipates needs and adjusts based on context, addressing underlying needs that might differ from what customers initially articulated.

Use Cases: When to Deploy Each Architecture

Agent-appropriate tasks include FAQ responses, ticket classification, status lookups, and appointment scheduling, where success means the specific action completed correctly rather than the underlying problem being solved.

Agentic architecture becomes necessary when resolution requires actions across multiple systems: refunds needing policy verification and payment integration, subscription cancellations with retention logic and prorated credits, returns involving partial calculations and inventory adjustments. Any workflow measured by whether the problem went away rather than whether a response was sent requires agentic capability.

Industry examples

In eCommerce AI customer support, agents answer product questions while agentic AI processes returns end-to-end.

In SaaS, agents handle password resets while agentic AI manages subscription changes with prorated billing and access updates.

In AI customer support for insurance, agents provide claim status while agentic AI manages first notice of loss through triage with policy verification, handling regulatory complexity that generic platforms can't address.

Combining AI Agents and Agentic AI: A Layered Approach

Effective stacks layer both architectures rather than choosing between them. A customer asking store hours doesn't need multi-step orchestration, and a reactive agent handles that faster and cheaper. But limiting stacks to agents means the 30% of tickets driving 70% of handle time never get automated.

The practical architecture uses an intake layer with agents classifying tickets and assessing complexity, routing simple queries to specialized agents and complex cases to agentic orchestration. The resolution layer mixes both, with straightforward resolutions executing through agents while multi-step resolutions trigger agentic workflows. The learning layer tracks successes and failures, informing routing decisions and coverage expansion.
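The intake and learning layers can be sketched roughly as follows. The intent names, the `systems_touched` complexity signal, and the tally structure are all hypothetical. A real intake layer would use a classifier rather than fixed sets.

```python
SIMPLE_INTENTS = {"store_hours", "order_status", "password_reset"}
COMPLEX_INTENTS = {"refund", "subscription_cancellation", "return"}


def route(intent: str, systems_touched: int) -> str:
    """Pick a resolution path from the intent and a complexity signal."""
    if intent in SIMPLE_INTENTS and systems_touched <= 1:
        return "agent"
    if intent in COMPLEX_INTENTS or systems_touched > 1:
        return "agentic_workflow"
    return "human"


# Learning layer, reduced to a tally of outcomes per route.
outcomes: dict[str, dict[str, int]] = {}


def record(route_name: str, resolved: bool) -> None:
    """Count resolved and unresolved tickets for each route."""
    tally = outcomes.setdefault(route_name, {"resolved": 0, "unresolved": 0})
    tally["resolved" if resolved else "unresolved"] += 1
```

Reviewing the unresolved counts on the `agent` route is one simple way to see which ticket types are candidates for agentic expansion.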

Starting with high-volume, low-complexity segments generates quick ROI while tracking what agents can't resolve defines requirements for agentic expansion.

Why This Distinction Matters for Your AI Customer Support Strategy

Deploying agents where agentic capabilities are needed creates escalation bottlenecks, with containment rates climbing while resolution stagnates and customers learning that automated support means redirection rather than help. Deploying agentic systems for simple tasks wastes resources. Getting the match right determines ROI.

Every vendor claims "AI-powered automation," which makes the agent-versus-agentic distinction a useful framework for probing what those claims mean. Can the system coordinate multi-step workflows or does each interaction start fresh? Does it execute actions in backend systems or just read data? When they say "resolution," is the problem solved or just responded to?

Governance requirements also differ substantially. Agents need task-level guardrails specifying what not to say and when to escalate. Agentic systems need outcome-level governance with policy frameworks defining what the system decides versus what requires approval, covering refund limits, escalation triggers, and compliance requirements.

Seven Questions That Separate Real Solutions from Demo-Ready Shelfware

How easy is it to use?

Counterintuitive, but critical. If the platform feels too easy during evaluation, it probably won't handle your actual cases. Genuine workflow complexity requires genuine configuration depth. Solutions that promise "set up in minutes" typically deliver deflection in minutes and resolution never.

How does the system handle duplicate tickets?

Customers open multiple tickets about the same issue constantly. Sometimes they create separate tickets for different issues within the same session. Can the platform identify and merge duplicates automatically? Does it track context across conversations from the same customer? The inability to manage this basic reality creates noise that compounds daily.
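One way to picture the difference between duplicates and genuinely separate issues is to group tickets by customer and issue. This is a simplified sketch: the ticket fields are hypothetical, and it assumes an upstream step has already labeled each ticket with an `issue`.

```python
from collections import defaultdict


def merge_duplicates(tickets: list[dict]) -> dict[tuple, list[int]]:
    """Group ticket ids by (customer, issue); same key means duplicate."""
    groups: dict[tuple, list[int]] = defaultdict(list)
    for ticket in tickets:
        key = (ticket["customer_id"], ticket["issue"])
        groups[key].append(ticket["id"])
    return dict(groups)
```

Two tickets from the same customer about the same issue land in one group, while a third ticket about a different issue stays separate.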

How does it manage multiple intents in a single message?

A customer writes: "I need to return the shirt from my last order, and also my subscription payment failed." Two separate policies, two separate actions, one message. Can the system execute both flows? Platforms that handle single-intent interactions adequately often fracture when customers behave like actual humans.
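The requirement can be made concrete with a deliberately naive sketch. The keyword map below is hypothetical, and keyword matching stands in for whatever intent model a real platform uses. The point is only that the output is a list of intents, not a single label.

```python
# Hypothetical keyword-to-intent map; a real system would use a classifier.
INTENT_KEYWORDS = {
    "return": "return_item",
    "payment failed": "fix_payment",
}


def detect_intents(message: str) -> list[str]:
    """Return every intent whose keyword appears, in order of appearance."""
    text = message.lower()
    hits = [(text.find(keyword), intent)
            for keyword, intent in INTENT_KEYWORDS.items()
            if keyword in text]
    return [intent for _, intent in sorted(hits)]
```

Applied to the message above, this yields two intents, each of which would then need its own policy check and its own action flow.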

Are there co-pilot features for edge cases?

No system resolves everything autonomously, and any vendor claiming otherwise is lying. The question is what happens at the boundaries. If every uncertain situation requires full human takeover, you've built an expensive routing system. Effective platforms augment what human agents can do rather than just escalating to them.

How does the agent execute back-office actions?

This question exposes the resolution-versus-deflection divide. If the system relies on free-text generation to describe what should happen next, it won't work at scale. Real resolution requires structured action execution with measurable accuracy. Ask specifically how they track whether actions completed successfully.
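Structured action execution, as opposed to free text describing what should happen, might be sketched like this. The `Action` and `ActionResult` types and the `issue_refund` handler are hypothetical names for illustration and are not drawn from any vendor's API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    name: str     # e.g. "issue_refund"
    params: dict  # structured arguments, not free text


@dataclass
class ActionResult:
    action: Action
    succeeded: bool
    detail: str


def issue_refund(order_id: str, amount: float) -> str:
    """Hypothetical backend handler used only for this example."""
    if amount <= 0:
        raise ValueError("amount must be positive")
    return f"refunded {amount} on {order_id}"


def execute(action: Action,
            handlers: dict[str, Callable[..., str]]) -> ActionResult:
    """Run a named handler and record whether it completed."""
    handler = handlers.get(action.name)
    if handler is None:
        return ActionResult(action, False, "unknown action")
    try:
        return ActionResult(action, True, handler(**action.params))
    except Exception as exc:  # backend failures are recorded, not hidden
        return ActionResult(action, False, str(exc))


def success_rate(results: list[ActionResult]) -> float:
    """Share of actions that completed; 0.0 for an empty list."""
    return sum(r.succeeded for r in results) / len(results) if results else 0.0
```

Because every action returns a result record, completion accuracy becomes something that can be counted rather than inferred from transcripts.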

How does it recover from mistakes?

Every system makes errors. The revealing question is what happens next. Does the platform detect when it's gone wrong? Can it self-correct before escalation? When escalation is necessary, does the human agent receive full context or start from scratch? Error handling architecture separates production-ready systems from perpetual pilots.
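The detect, self-correct, and escalate-with-context sequence can be sketched as a loop over fallback approaches. This is an illustration under assumptions: each approach is modeled as a callable returning `True` on success, and the attempt-log format is made up.

```python
from typing import Callable


def resolve_with_recovery(approaches: list[Callable[[], bool]],
                          ticket_id: str) -> dict:
    """Try each approach in order; escalate with the full log if all fail."""
    attempts = []
    for index, approach in enumerate(approaches):
        try:
            ok = approach()
            error = None
        except Exception as exc:
            ok, error = False, str(exc)
        attempts.append({"approach": index, "ok": ok, "error": error})
        if ok:
            return {"ticket": ticket_id, "status": "resolved",
                    "attempts": attempts}
    # Hand the human everything that was tried, so they do not start over.
    return {"ticket": ticket_id, "status": "escalated", "attempts": attempts}
```

The escalated result carries the same attempt log as the resolved one, so a human picking up the ticket sees what failed and why.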

What happens when you exceed stated capabilities?

Push vendors to describe failure modes explicitly. When the system encounters something outside its training, does it acknowledge limits and escalate appropriately, or does it confidently provide wrong answers? Graceful degradation matters more than peak capability.

How Notch Combines Agentic AI with Governed Autonomy

Notch's architecture demonstrates what production-grade agentic AI looks like when built specifically for customer support outcomes rather than general-purpose automation.

The platform combines over 40 specialized AI agents coordinated through connected agentic workflows, with each agent handling specific capabilities like customer identification, policy matching, requirement gathering, and action execution. The orchestration layer ensures these agents work together toward resolution rather than operating as isolated tools, which is the difference between having capabilities and delivering outcomes.

The results from this architecture speak to what becomes possible when AI owns outcomes. Notch customers reach 77% autonomous ticket resolution within 12 months, and that means genuinely resolved with problems fixed and customers satisfied, not just responded to with deflection tactics. One eCommerce customer hit 73% autonomous resolution with 92% faster handling times and zero additional headcount. Guardio cleared a 20,000-ticket backlog in days while reaching an 87% resolution rate. These numbers reflect what happens when agentic architecture replaces agent collections.

The platform's pricing model reinforces this outcome orientation. Notch charges by resolution, meaning customers pay only for tickets fully resolved rather than just automated. That pricing structure only works when a platform has genuine confidence in outcome delivery rather than activity metrics, and the willingness to stake revenue on resolution rates reveals architectural capability that demo decks and marketing claims can't replicate.

The Future: From Isolated Agents to Orchestrated Autonomy

The market is expected to move from isolated AI tools toward integrated systems that coordinate toward outcomes, and organizations building on agent-only architectures may face re-architecture costs as expectations shift.

Individual agents are gaining agentic traits while orchestration layers become more accessible, creating a convergence that will eventually blur the distinction. The distinction matters most now, during the transition, when choosing platforms with proper foundations determines which organizations ride the improvement curve and which need costly rebuilds.

The Bottom Line

The distinction between AI agents and agentic AI determines whether automation handles tasks or delivers outcomes. For support operations, this translates to deflection versus resolution, with platforms optimized for containment eventually eroding satisfaction while those engineered for genuine resolution improve it.

Evaluating platforms requires understanding which architecture underlies vendor claims and matching that to workflow complexity. The right questions focus on resolution rates, integration depth, policy governance, and outcome guarantees rather than AI capability in the abstract.

Book a demo to see how Notch's agentic architecture translates to resolution for specific workflows and use cases.

Key Takeaways

- AI agents execute specific tasks within constrained parameters, functioning as workers that handle individual assignments without broader context

- Agentic AI orchestrates multi-step workflows toward outcomes with minimal oversight, coordinating multiple agents toward business goals

- Choosing the wrong architecture for workflow complexity creates escalation bottlenecks that compound over time

- The 2026 market is shifting from isolated agents to coordinated agentic systems, making architectural decisions particularly consequential

Got Questions? We’ve Got Answers

Agentic systems require outcome-level governance rather than just task-level rules. This includes policy frameworks defining what the system can decide autonomously versus what requires human approval, with specific parameters for refund limits, discount authority, escalation triggers for sensitive situations, and compliance requirements that vary by jurisdiction or customer segment.

The system should provide clear reasoning for decisions in audit mode, enabling quality assurance teams to verify the AI applied correct policies and used appropriate data to reach conclusions.

Start with workflow analysis. High-volume simple requests benefit from agent automation for fast wins.

Complex multi-step resolutions require agentic architecture for transformational outcomes. Most mature stacks layer both: agents handling tier-one tasks, agentic orchestration resolving complex workflows, humans focusing on situations genuinely requiring judgment.

Well-designed agentic systems recognize the boundaries of their knowledge and authority rather than forcing resolution attempts on unfamiliar situations.

When encountering novel scenarios, the system should gather relevant information, identify which aspects fall within its capabilities, execute what it can, and escalate the remainder with full context so human agents don't start from scratch.

The learning layer then incorporates these cases to expand future coverage, either through policy updates that address the new scenario type or by flagging patterns that indicate a need for new agent capabilities.

Agent performance is best measured by task completion accuracy, response time, and escalation rate for the specific functions they handle.

Agentic AI performance should be measured by true resolution rate, meaning tickets where the customer's problem was genuinely solved without human intervention, along with customer satisfaction scores and the complexity of workflows handled autonomously. Containment rate alone can be misleading because it often measures deflection rather than resolution.
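The difference between the two rates is simple arithmetic, shown here as a small sketch. The ticket fields `human_touched` and `problem_solved` are hypothetical labels. In practice the second one is the hard part to measure.

```python
def rates(tickets: list[dict]) -> dict[str, float]:
    """Compute containment and true resolution rates over a ticket list."""
    total = len(tickets)
    if total == 0:
        return {"containment": 0.0, "resolution": 0.0}
    # Contained: no human was involved, whether or not the problem went away.
    contained = sum(1 for t in tickets if not t["human_touched"])
    # Resolved: no human was involved and the problem was actually solved.
    resolved = sum(1 for t in tickets
                   if not t["human_touched"] and t["problem_solved"])
    return {"containment": contained / total, "resolution": resolved / total}
```

With four tickets where three never reach a human but only one of those three is actually solved, containment is 75% while true resolution is 25%.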
